WEEK 12 TANDEM PROJECT UPDATE:

This has been a week of accelerated achievement on all fronts for TANDEM. Thanks to Steve, we have a working MVP hosted on www.dhtandem.com/tandem. We have also made huge strides on the front end with Kelly’s robust initial set of HTML/CSS pages for the site. While the two ends are not tied together just yet, connecting them is within sight as of this weekend. Jojo continues to surprise the group with her intuitive mix of outreach and awesome, having sent out personalized invitations to key members of our contact list and to people who have shown interest over the past few months. Keep reading for more detailed information about these and other developments.

DEVELOPMENT / DESIGN:

MVP functionality added this week includes:

- Ability to upload multiple files

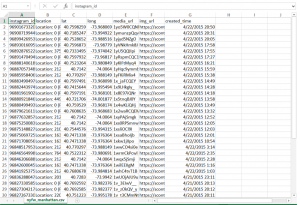

- Ability to persist data via a SQLite database containing project data and pointers to file locations

- Back-end analytic code connected to the front end

- Ability to zip and download results
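To illustrate how the persistence and download pieces listed above can fit together, here is a minimal sketch using only Python's standard library. It is not TANDEM's actual code: the table names (`project`, `upload`), the column names, and every function name are hypothetical, chosen only to show a database that stores project data alongside pointers to file locations, plus a helper that zips a set of result files.

```python
import sqlite3
import zipfile
from pathlib import Path


def init_db(conn):
    """Create two hypothetical tables: projects, and pointers to their files."""
    conn.executescript("""
        CREATE TABLE IF NOT EXISTS project (
            id INTEGER PRIMARY KEY,
            name TEXT NOT NULL
        );
        CREATE TABLE IF NOT EXISTS upload (
            id INTEGER PRIMARY KEY,
            project_id INTEGER NOT NULL REFERENCES project(id),
            path TEXT NOT NULL
        );
    """)


def add_project(conn, name, file_paths):
    """Record a project and the location of each uploaded file (not its bytes)."""
    cur = conn.execute("INSERT INTO project (name) VALUES (?)", (name,))
    project_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO upload (project_id, path) VALUES (?, ?)",
        [(project_id, str(p)) for p in file_paths],
    )
    conn.commit()
    return project_id


def project_files(conn, project_id):
    """Return the stored file locations for a project, in upload order."""
    rows = conn.execute(
        "SELECT path FROM upload WHERE project_id = ? ORDER BY id",
        (project_id,),
    )
    return [Path(row[0]) for row in rows]


def zip_results(paths, archive_path):
    """Bundle the given files into one zip archive, stored under their base names."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for p in paths:
            zf.write(p, arcname=Path(p).name)
    return archive_path
```

Storing paths rather than file contents keeps the database small, at the cost of having to keep the database and the file store in sync.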

Remaining tasks are:

- Implement polished UI

- Implement error handling

- Handle session management so that simultaneous users keep their data separate

- Look for opportunities to gain efficiency

- Correct a small bug in the OpenCV output

- Review security, backup, and file storage approaches, and rework as needed to achieve best practices
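One common way to approach the session management task above is to give each visitor a random identifier and keep that visitor's files in a directory of their own. The sketch below assumes that approach. It is not a description of what TANDEM does or will do, and the function names `new_session_id` and `session_dir` are hypothetical.

```python
import uuid
from pathlib import Path

HEX_DIGITS = set("0123456789abcdef")


def new_session_id():
    """Return a random 32-character hexadecimal token for one user session."""
    return uuid.uuid4().hex


def session_dir(base_dir, session_id):
    """Return (creating it if needed) the directory reserved for one session.

    Anything that is not a 32-character hex token is rejected, so a crafted
    id such as "../other" cannot point outside base_dir.
    """
    if len(session_id) != 32 or not set(session_id) <= HEX_DIGITS:
        raise ValueError("invalid session id")
    directory = Path(base_dir) / session_id
    directory.mkdir(parents=True, exist_ok=True)
    return directory
```

With this layout, two people uploading a file with the same name at the same time write to different directories, so their data stays separate.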

OUTREACH:

Continuing to garner community support, Jojo attended a GC Digital Initiatives event on Tuesday as well as the English department’s Friday Forum. Additionally, initial invites for the launch went out to the Digital Fellows and DH Praxis friends and family via Paperless Post. Digital Fellow Ex Officio Micki Kaufman has already replied that she wouldn’t miss it. I’m now working to organize outreach with the other teams.

The press release is coming along on the class wiki, too!!

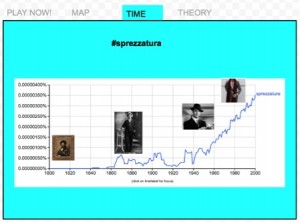

CORPUS:

With functionality ironed out, we continue to work with the dataset we have generated via TANDEM for the Mother Goose corpus. As part of our release, we will include work that we have done in both analysis and data visualization for the initial test corpus. If you have questions or points of interest in Mother Goose, feel free to leave them in the comments below! We are interested in hearing the kinds of questions one might ask of a text/image corpus.