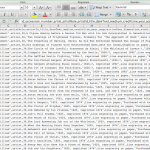

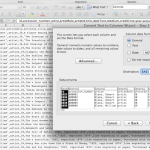

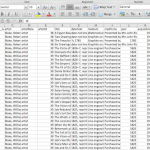

The code merge was completed and tested on two local machines and uploaded to the server at Reclaimhosting.com. According to Tim Owens at Reclaim, the necessary Python packages were loaded on the server, but the code cannot find three of them, so, as of this date, the code has not been run (Note: running this code on the server is an interim step to verify that the core logic of the text analysis and image analysis works properly). However, the server was built out so that the demonstration Django application launches successfully. Unfortunately, once it launches, some of the pages cause errors as does any attempt to write to the database. Our subject matter expert has been contacted to help debug these errors.

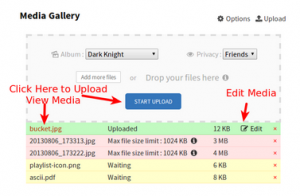

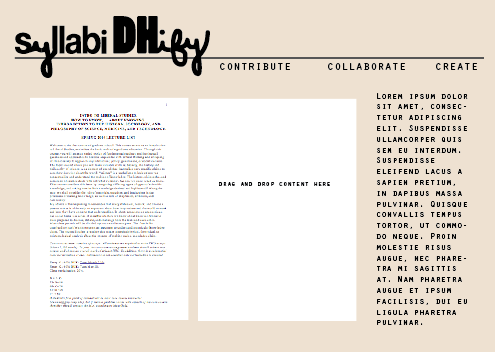

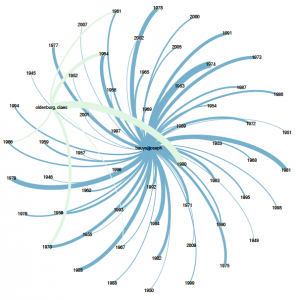

On a separate development path, multiple members of the team are working on building the Django components we need to turn the analytics engine into an interactive web application. Steve is working on linking the the core program to a template or view. Chris, Kelly and Jojo are working on designing and building the templates in a Django framework. Current UI/UX concerns involve potential upload sizes combined with processing time, button prompts that launch the analysis, and ways to convey best practice documentation so that it’s clear, concise, and that it facilitates proactive troubleshooting. The next part of this process will be to address the presentation of the final page, where the user is promoted to download their file. This page has great potential to be underwhelming, but there are some simple features we can apply to jazz it up, such as data visualization examples and by providing external links to next-step options.

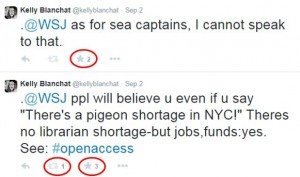

On the outreach front, Jojo went to a Django hacknight Wednesday to get a handle on people building Django apps. She made contact with several new advocates in addition to garnering further support from Django Girls participants web developers Nicole Dominguez and Jeri Rosenblum, as well as hacknight organizer Geoff Sechter. The new contacts include Michel Biezunski. He seems like he could help. And has used Django to upload and redistribute files for his app InstantPhotoAlbum. So he could help when we work on figuring out potential options for placing and giving back data.

Last but not least, Chris attended a meetup at DaniPad NYC Tech Coworking space in Queens, NY this past week. There, he met a handful of Python developers who had insight into working with Django based web-apps. Commercial uses for TANDEM-like were brainstormed and people responded with interest in testing a prototype. Along with academic beta-testers, some of these people will be included in the contact list when TANDEM is deployed.